
Our goal is always to provide you with the most useful and relevant information. Every change we make to Search is intended to improve the usefulness of the results you see.

See how real people make Google Search better

Search has changed over the years to meet the evolving needs and expectations of the people who use Google. From innovations like the Knowledge Graph, to updates to our systems that ensure we’re continuing to highlight relevant content, our goal is always to improve the usefulness of your results. That is why, while advertisers can pay to be displayed in clearly marked ad sections, no one can buy better placement in the Search results.

We put every proposed change to Search through a rigorous evaluation process, analyzing metrics to decide whether to implement it. Data from these search evaluations and experiments go through a thorough review by experienced engineers and search analysts, as well as legal and privacy experts, who then determine whether the change is approved to launch. In 2023, we ran over 700,000 experiments that resulted in more than 4,000 improvements to Search.

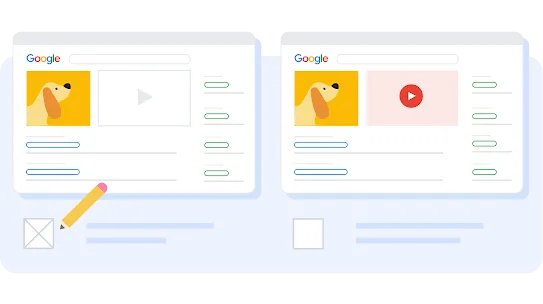

Running experiments

First, we enable the feature in question for just a small percentage of people, usually starting at 0.1%. We then compare the experiment group to a control group that did not have the feature enabled.
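
As an illustration only, one common way to expose a feature to a fixed share of people is to hash a stable identifier into a bucket. The sketch below is not Google's implementation; the function name `assign_group`, the identifiers, and the choice of SHA-256 are all assumptions made for the example. For simplicity it treats everyone outside the experiment share as the control group.

```python
import hashlib

# 0.1%, the starting share mentioned in the text above.
EXPERIMENT_FRACTION = 0.001


def assign_group(user_id: str, experiment_name: str,
                 fraction: float = EXPERIMENT_FRACTION) -> str:
    """Deterministically place a user in 'experiment' or 'control'.

    Hypothetical helper: hashing the experiment name together with the
    user ID keeps a user's assignment stable across visits while letting
    different experiments split users independently.
    """
    key = f"{experiment_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "experiment" if bucket < fraction else "control"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(100_000)]
    in_experiment = sum(
        assign_group(u, "new-feature") == "experiment" for u in users
    )
    print(f"{in_experiment} of {len(users)} users see the feature")
```

Because the assignment depends only on the inputs, the same person lands in the same group every time, which keeps the experiment and control groups cleanly separated.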

Analyzing metrics

Next, we look at a very long list of metrics, such as what people click on, how many queries they ran, whether queries were abandoned, and how long it took for people to click on a result.
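
To make these metrics concrete, here is a minimal sketch that computes a few of them from a list of logged queries. The `QueryLog` record and the `summarize` function are hypothetical, and the definitions used (for example, counting a query with no click as abandoned) are simplifying assumptions rather than the definitions used in practice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QueryLog:
    """Hypothetical record of a single search query."""
    query: str
    clicked: bool
    seconds_to_click: Optional[float] = None  # None when there was no click


def summarize(logs: list[QueryLog]) -> dict:
    """Compute a handful of illustrative metrics for one group of users."""
    total = len(logs)
    if total == 0:
        raise ValueError("no queries logged")
    clicked = sum(1 for q in logs if q.clicked)
    click_times = [
        q.seconds_to_click
        for q in logs
        if q.clicked and q.seconds_to_click is not None
    ]
    return {
        "queries": total,
        "click_rate": clicked / total,
        # Assumption: a query with no click counts as abandoned.
        "abandonment_rate": (total - clicked) / total,
        "mean_seconds_to_click": (
            sum(click_times) / len(click_times) if click_times else None
        ),
    }


if __name__ == "__main__":
    sample = [
        QueryLog("weather", clicked=True, seconds_to_click=2.0),
        QueryLog("news", clicked=True, seconds_to_click=4.0),
        QueryLog("recipes", clicked=True, seconds_to_click=6.0),
        QueryLog("asdf", clicked=False),
    ]
    print(summarize(sample))
```

Running the same summary separately on the experiment group and the control group gives the pair of numbers that the comparison step needs.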

Measuring engagement

Finally, we use these results to measure whether engagement with the new feature is positive, to ensure that the changes we make are increasing the relevance and usefulness of our results for everyone.
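
The text does not say which statistical method is used to judge whether a change is positive. As one standard possibility, the sketch below applies a two-proportion z-test to compare the click rate of the control group with that of the experiment group. The function name and the sample counts are made up for illustration.

```python
import math


def two_proportion_z(success_a: int, n_a: int,
                     success_b: int, n_b: int) -> tuple[float, float]:
    """Compare two rates (a = control, b = experiment).

    Returns the z statistic and the two-sided p-value under the normal
    approximation. A positive z means group b has the higher rate.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("rates are all 0 or all 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: queries with a click out of total queries per group.
    z, p = two_proportion_z(success_a=500, n_a=1000,
                            success_b=600, n_b=1000)
    print(f"z = {z:.2f}, p = {p:.6f}")
```

A small p-value with a positive z would suggest the experiment group's higher click rate is unlikely to be chance alone. A single metric like this is only one input; the review described earlier weighs many metrics together.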